🔹Introduction

Kubernetes, an open-source container orchestration platform, has revolutionized the way applications are deployed, scaled, and managed in modern IT environments. One of its key features is the abstraction of services, allowing seamless communication between different components within a cluster. In this article, we'll delve into k8s services, explore their types, and provide practical examples.

Before diving into Services, let's get an overview of Pods and Deployments.

Key Components:

Deployments in Kubernetes are a higher-level abstraction that simplifies the management and scaling of applications. A Deployment defines the desired state for a set of replicas of a pod and ensures that the current state matches the desired state. It enables declarative updates to applications, allowing easy rollouts and rollbacks.

A pod is the smallest deployable unit in Kubernetes, representing a single instance of a running process or a group of tightly coupled processes. Pods serve as the basic building blocks for applications and encapsulate one or more containers, shared storage, and network resources.

A Service is an abstraction layer that exposes a set of pods as a network service. Services facilitate communication between different parts of an application and provide a stable endpoint. Clients, whether inside the cluster or (depending on the service type) outside it, can reach the application without knowing the IP addresses of individual pods, which change whenever pods are recreated.

🔹Types of Kubernetes Services:

ClusterIP:

Description: This is the default service type, providing a stable internal IP address reachable only within the cluster.

Other pods within the Kubernetes cluster can access the service using this ClusterIP.

ClusterIP services are not accessible from outside the Kubernetes cluster by default. They are intended for internal communication between different components within the cluster.

Example YAML:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-clusterip-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```
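The selector and targetPort only do something if pods carry the matching label and listen on that port. Here is a minimal sketch of a Deployment that could back the service above. The name and the image `my-app:1.0` are hypothetical placeholders, and the container is assumed to listen on 8080.

```yaml
# Hypothetical Deployment backing my-clusterip-service.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app              # must match spec.selector in the Service
    spec:
      containers:
        - name: my-app
          image: my-app:1.0      # placeholder image, assumed to listen on 8080
          ports:
            - containerPort: 8080  # the Service's targetPort points here
```

If the cluster runs a DNS add-on such as CoreDNS, other pods in the same namespace can then reach these pods at `http://my-clusterip-service:80`.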

NodePort:

Description: Exposes the service on a static port on every node's IP, allowing external access. Unless you specify the port yourself, Kubernetes allocates one from a configured range (30000-32767 by default) and opens it on each node in the cluster.

When you access any node in your cluster on the allocated port, Kubernetes forwards the traffic to the respective pods that match the service's selector.

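NodePort does not balance traffic across nodes: the client decides which node IP to contact. Whichever node receives the request, kube-proxy forwards it to one of the matching pods, which may be running on a different node.

Example YAML: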

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: NodePort
```
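You can set the node port yourself if you want a predictable value instead of a random allocation. The sketch below pins it with the `nodePort` field. The value 30080 is an arbitrary example. It must fall inside the cluster's node port range and must not already be in use.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-service
spec:
  type: NodePort
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80          # service port on the ClusterIP, inside the cluster
      targetPort: 8080  # container port on the selected pods
      nodePort: 30080   # example value: port opened on every node's IP
```

This example shows all three port fields together. External clients use `nodePort`, in-cluster clients use `port`, and both end up at `targetPort` on a pod.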

LoadBalancer:

Description: Exposes the service externally using a cloud provider's load balancer. On a supported cloud platform, creating a LoadBalancer service automatically provisions an external load balancer, which distributes incoming traffic across the pods that belong to the service. On clusters without a load balancer implementation (for example, a plain bare-metal setup), the service's external IP stays pending.

Example YAML:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: LoadBalancer
```

ExternalName:

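Description: Maps the service to the contents of the externalName field (e.g., a DNS name). Cluster DNS answers lookups for the service with a CNAME record pointing to that name. No selector, pods, or proxying are involved.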

Example YAML:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: some-external-service
spec:
  externalName: my-company.example.com
  type: ExternalName
```

🔹Practical Example

First, let's write a simple HTML website that will be served by NGINX.

Step 1: Create a directory named web-app and write an index.html file inside it (any content you like).

You can also get the code from my GitHub repo: https://github.com/AniketKharpatil/DevOps-Journey/tree/main/k8s/web-app

Then write a Dockerfile in the same directory to containerize the web app:

```dockerfile
# Use NGINX base image
FROM nginx:alpine

# Copy the HTML files to NGINX default public directory
COPY index.html /usr/share/nginx/html/index.html

# Expose port 80
EXPOSE 80

# Command to start NGINX when the container starts
CMD ["nginx", "-g", "daemon off;"]
```

Step 2: In your terminal, navigate to the directory containing your Dockerfile and index.html file, then run:

```bash
docker build -t web-app .
```

Step 3: After building the image, you can run the Docker container:

```bash
docker run -d -p 8080:80 web-app
```

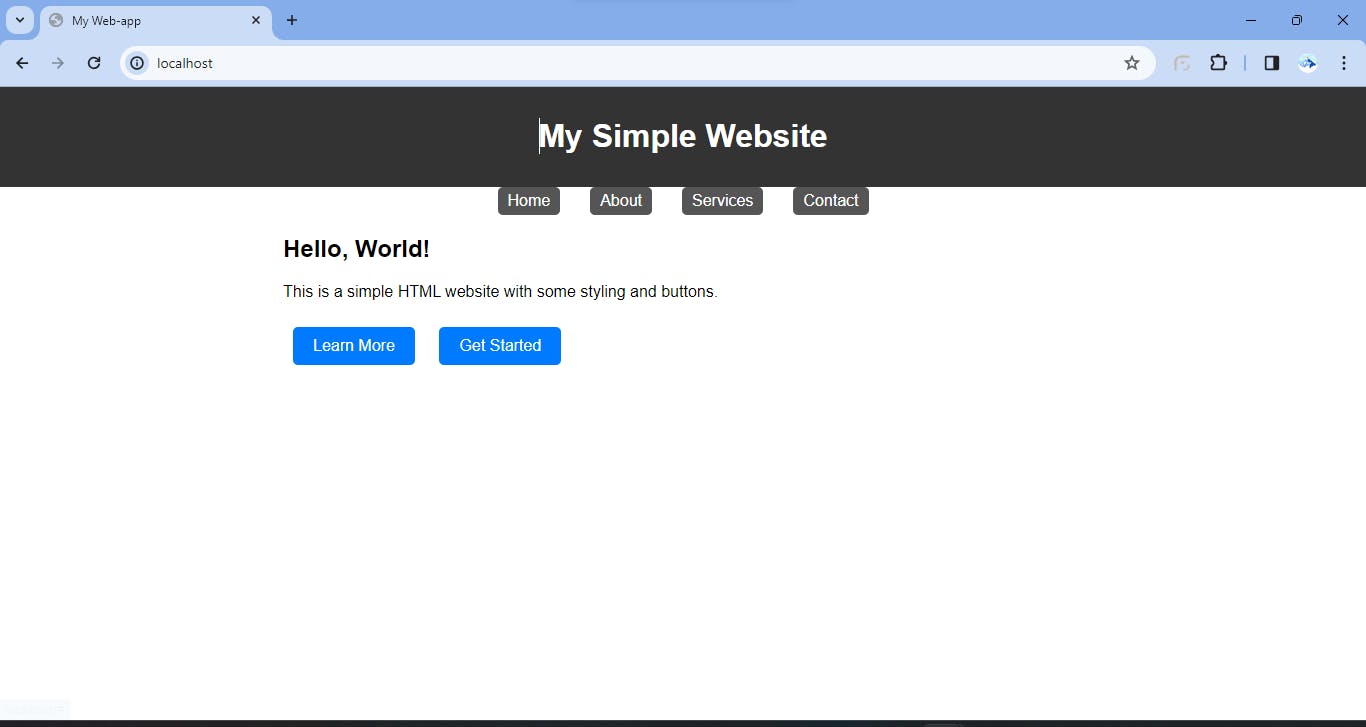

Open http://localhost:8080 in your browser. If you see your page, as in the screenshot, your Docker image is working.

Step 4: Create a Kubernetes Deployment YAML file named deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          image: web-app
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
```

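Apply it with:

```bash
kubectl apply -f deployment.yaml
```

Because imagePullPolicy is IfNotPresent, the node uses a local web-app image if one exists instead of pulling it from a registry. This works when the cluster shares the image store of the Docker daemon you built with (for example, Docker Desktop's built-in Kubernetes). Otherwise, load or push the image to a location the cluster can pull from.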

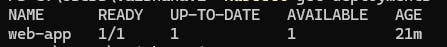

You'll see the deployment being created:

```bash
kubectl get deployments
```

Also check its pod:

```bash
kubectl get pods
```

Step 5: Expose the deployment with a NodePort service

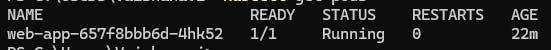
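The service manifest for this step appears only in the screenshot above. The following is a sketch of a manifest that should produce an equivalent service. It assumes a file named service.yaml and uses port 80 for both `port` and `targetPort`, which matches the Deployment's containerPort.

```yaml
# Assumed contents of service.yaml (reconstructed sketch, not the original file)
apiVersion: v1
kind: Service
metadata:
  name: web-app
spec:
  type: NodePort
  selector:
    app: web-app       # matches the pod labels in deployment.yaml
  ports:
    - protocol: TCP
      port: 80         # service port inside the cluster
      targetPort: 80   # NGINX listens on 80 inside the container
      # nodePort omitted, so Kubernetes allocates one from its node port range
```

Apply it with `kubectl apply -f service.yaml`. Running `kubectl expose deployment web-app --type=NodePort --port=80` creates a similar service imperatively.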

```bash
kubectl get svc
```

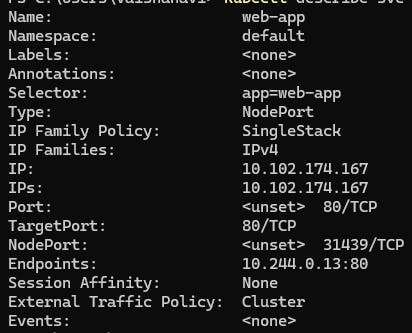

```bash
kubectl describe svc web-app
```

You can see that Kubernetes automatically allocated node port 31439, which forwards traffic to port 80 on our pod.

Access the website at:

http://<<Node-IP>>:<<NodePort>>

To test it locally, I forwarded port 80 on localhost to the pod. Note that this connects directly to the pod and bypasses the service:

```bash
kubectl port-forward web-app-657f8bbb6d-4hk52 80:80
```

In cloud environments, use the instance's public IP as the node IP for a NodePort service, and make sure your firewall or security group allows the node port. For a LoadBalancer service, use the load balancer's IP directly.

Here is the output of our web-app:

🔹Conclusion:

Kubernetes services play a crucial role in enabling seamless communication between components in a cluster. Understanding the different service types and their use cases empowers developers to design robust and scalable applications. With the provided examples and YAML files, you can kickstart your journey into harnessing the full potential of Kubernetes services in your containerized applications.

Reference: https://github.com/AniketKharpatil/DevOps-Journey/tree/main/k8s/web-app